Exploring The Undress AI Telegram Bot Name: Concerns And Context

The digital landscape changes quickly, and with it come new technologies that can be surprising. People are talking more and more about the "undress AI Telegram bot name" and what it even means. The topic catches attention, and it's important to get a good handle on what is going on with these tools. We want to help you understand the core ideas around them and why they've become such a talking point, especially on Telegram, a very popular messaging app.

A lot of folks are curious about how artificial intelligence, or AI, is changing what we see online, particularly with images. There's a certain fascination with how AI can alter pictures in ways that were not possible before. This interest often leads to discussions about specific tools or bots that claim to do very particular things with images, and that's where the conversation about an undress AI Telegram bot name comes in. It's a topic that raises a lot of questions about technology, privacy, and what's right or wrong.

So, we're going to talk about the general idea of these bots, why they keep popping up, and what the bigger picture looks like. We won't name any specific bots or tell you how to find them. Our aim is to give you helpful information about the technology itself and the serious issues that come with it. It's about understanding the background and the potential impact rather than focusing on any one name, which can change fast anyway.

Table of Contents

- The Rise of AI Image Manipulation

- The Elusive Undress AI Telegram Bot Name

- Serious Concerns: Ethics and Legality

- Protecting Yourself and Others

- Frequently Asked Questions (FAQ)

- Conclusion

The Rise of AI Image Manipulation

AI has been getting better and better at creating and changing images, which is a pretty big deal. What started as simple photo filters has grown into something far more complex. AI programs can now generate entirely new images from scratch, or take existing pictures and alter them in ways that are nearly impossible to spot without close inspection. This is a very powerful capability, and it's changing how we think about what's real and what's not online. The technology is still developing, and people are finding new ways to use it all the time.

What AI Can Do Now

Today's AI can do some truly incredible things with pictures. It can swap faces, change expressions, or put people into entirely different settings. This is all thanks to advanced algorithms, which are basically sets of rules that computers follow. These algorithms learn from huge amounts of data, which lets them model how images are put together and how to make realistic changes. For example, they can learn what a person's body typically looks like and then try to generate something similar. This is, in some respects, how "deepfakes" are made, where a person's face is put onto someone else's body in a video or photo. It's a bit mind-boggling how good these systems have become.

The ability of AI to manipulate images has opened up new creative possibilities for artists and designers. You can see AI-generated art and photo edits that are truly stunning. However, the same technology can also be used for less positive purposes. When AI is applied to alter images of people without their permission, especially in sensitive ways, it crosses a serious line. It's a tool that can build amazing things, but it can also cause harm, depending on who is using it and for what reason. This dual nature is something we really need to understand as these technologies become more common.

The Appeal of Bots on Messaging Apps

Messaging apps like Telegram are popular because they are easy to use and appear to offer a lot of privacy features. This makes them a very appealing place for all sorts of bots to pop up. Bots are automated programs that perform tasks, and on Telegram they can do everything from giving you weather updates to playing games. The appeal of an AI image manipulation bot on such a platform is that it seems very accessible. A user can simply send an image to a bot and get an altered version back, without needing special software or computer skills. It removes a lot of the technical hurdles.

This ease of access, however, also presents significant risks. When these bots are used to create non-consensual imagery, it becomes a very serious problem. The anonymity that some messaging apps offer can also make it harder to track down who is creating or distributing such content. So, while the convenience is a big draw, it's also what makes these bots concerning when they are used improperly. This combination of powerful AI and accessible platforms is a bit of a double-edged sword. People need to be very aware of what they are interacting with and what the consequences could be.

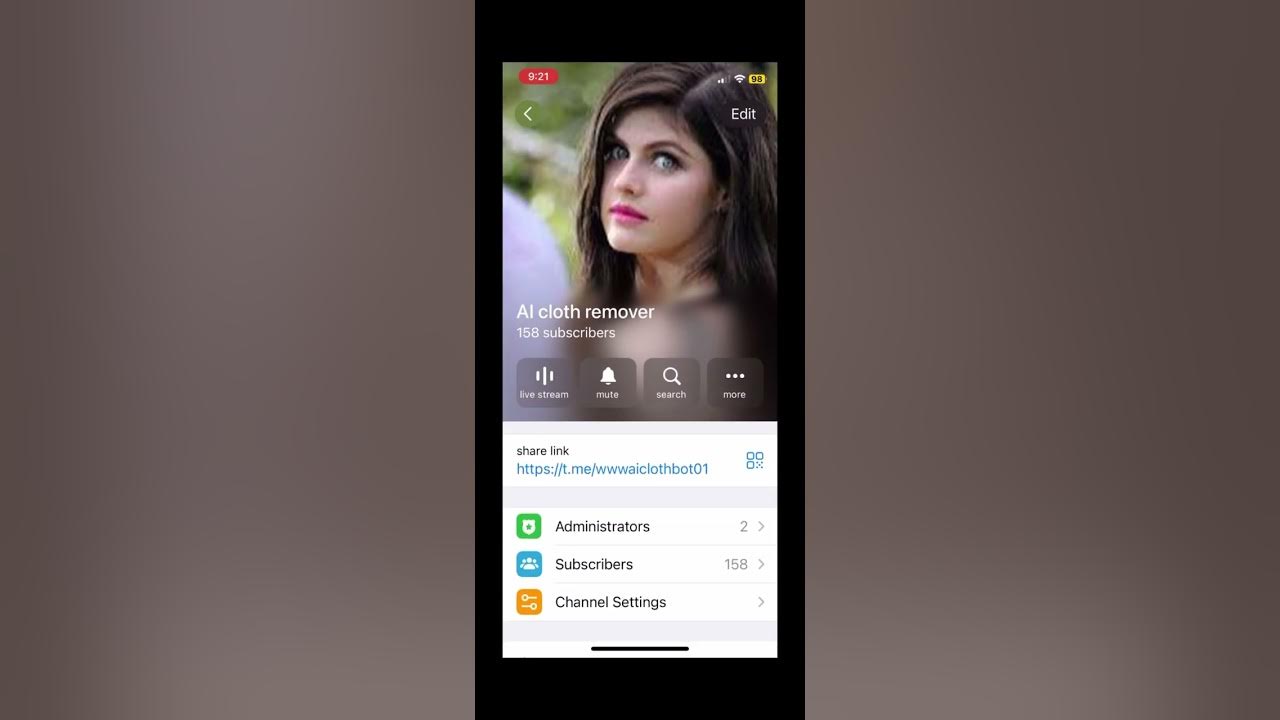

The Elusive Undress AI Telegram Bot Name

When people talk about an "undress AI Telegram bot name," they are usually referring to a bot that claims to remove clothing from images using artificial intelligence. The truth is, there isn't one single, official name for such a bot that stays the same. These bots tend to appear and disappear quickly. They change their names, and new ones pop up as older ones get shut down. For the platforms trying to control them, it's like a game of whack-a-mole. So, trying to find a specific, lasting name is often a fruitless search.

The reason for this constant change is usually that these bots operate in a very grey area or, sometimes, completely outside the law. Platforms like Telegram have rules against content that is harmful or non-consensual. When a bot is identified as violating these rules, it gets removed. But it's common for another one to appear under a different name, offering similar capabilities. This makes it very hard to keep track of any particular "undress AI Telegram bot name," because each one tends to exist only temporarily. It's a continuous cat-and-mouse situation.

Why Names Are Hard to Pin Down

The names of these kinds of bots are hard to pin down for several reasons. For one, the people who create them often want to stay hidden. They might use generic or constantly changing names to avoid detection, which helps them evade the security measures that platforms put in place. Also, if a bot gains too much attention, it's more likely to be reported and taken down. So, keeping a low profile, or at least a constantly shifting one, appears to be part of their strategy. It's a deliberate choice to be hard to trace, which is concerning for anyone trying to understand the landscape.

Another reason is the very nature of these operations. They are often run by individuals or small groups, not large, established companies. This means they don't have a brand or a consistent presence to maintain. They are opportunistic, appearing when they can and disappearing when they must. As a result, a stable "undress AI Telegram bot name" is virtually non-existent. You might hear whispers of a name today, but tomorrow it could be gone, or a new one might have taken its place. It's a very fluid situation, and that makes it tricky to keep up with.

How These Bots Generally Operate (Without Specifics)

Generally speaking, these types of bots work by taking an image that a user sends them. They then use AI algorithms to process that image, attempting to remove clothing or alter it in a similar way. The AI has been trained on a lot of data, which allows it to generate parts of an image that weren't there before. The process is technically complex, but from the user's perspective it seems very simple: you send a picture, and you get a changed one back. This is how many generative AI tools work, just applied to a very specific and often problematic task.

The quality of the altered images can vary a lot. Some look very fake and obviously manipulated, while others can be surprisingly realistic. This depends on the sophistication of the AI model and the data it was trained on. It's important to remember that these are not actual photographs of a person without clothes; they are digitally created fakes, illusions generated by a computer program. So, even if they look convincing, they are not real representations of the person in the original image. This distinction is very important to grasp, especially when considering the implications.

Serious Concerns: Ethics and Legality

The existence of bots that manipulate images in this way raises very serious ethical and legal concerns. When images are altered without a person's consent, especially to create intimate or revealing content, it's a huge violation of privacy. This kind of misuse of AI technology can cause significant harm to individuals. It's not just about a picture; it's about a person's dignity, reputation, and sense of safety. These are fundamental human rights that are being undermined, and the consequences can be devastating for those targeted.

Moreover, the spread of such manipulated images can contribute to a culture where consent is disregarded and where individuals, particularly women, are objectified. This technology can be used to harass, blackmail, or simply humiliate people. It's a very dangerous tool in the wrong hands, and its potential for harm is clear. We need to be mindful of these broader societal impacts, not just the technical aspects. It's a discussion that goes far beyond the "undress AI Telegram bot name" itself.

The Issue of Consent

At the heart of the ethical problem with these bots is the complete lack of consent. Creating or sharing intimate images of someone without their permission is a profound violation. It doesn't matter if the image is real or fake; the harm comes from the non-consensual nature of its creation and distribution. People have a right to control their own image and how it's used. When AI is used to strip away that control, it's a very serious breach of trust and privacy. This is arguably the most important point to consider when talking about these kinds of technologies. It's about respecting individual autonomy.

Even if someone thinks it's just a "joke" or "harmless fun," the impact on the person whose image is used can be severe and long-lasting. They might experience emotional distress, reputational damage, or even real-world threats. So, the issue of consent is absolutely central to understanding why these bots are so problematic. It's not a minor detail; it's the entire foundation of the ethical dilemma. We must uphold the principle that everyone has the right to decide what happens with their own image, especially when it comes to intimate content.

Legal Ramifications Around the Globe

The legal situation surrounding AI-generated non-consensual intimate imagery is complex and varies from place to place. Many countries now recognize the severe harm caused by deepfakes and similar manipulations, and they are starting to pass laws specifically to address them. For example, some places have made it illegal to create or share such images without consent, even if they are not real. These laws aim to protect individuals from digital sexual violence and harassment. It's a sign that governments are taking this threat seriously, which is a good thing.

However, because the technology is so new and develops so quickly, laws can struggle to keep up. There are still challenges in enforcing them, especially when the creators of the content are in different countries or are using anonymous platforms. Nevertheless, the trend is towards greater legal accountability for those who create or distribute these harmful images. It's a very active area of legal development, and everyone should be aware that there can be serious legal consequences for engaging in such activities. So, people should definitely think twice before getting involved with anything like this.

Impact on Individuals

The impact of non-consensual intimate imagery on individuals can be truly devastating. Victims often experience profound psychological distress, including anxiety, depression, and feelings of humiliation or betrayal. Their personal and professional lives can be severely affected, because these images can spread rapidly online, making the damage very difficult to control. This kind of experience can erode a person's sense of safety and trust in others. It's a very personal attack that leaves deep emotional scars. The digital nature of the crime doesn't make the pain any less real.

Beyond the emotional toll, there can be tangible consequences too. Victims might face harassment or bullying, or even lose their jobs or educational opportunities. The permanence of content online means that these images can resurface years later, causing ongoing trauma. So, it's not just a fleeting moment of harm; it's something that can affect a person for a very long time. This is why it's so important to talk about the dangers of these bots and to support efforts to combat their misuse. We need to remember the real people behind the images and the pain they might experience.

Protecting Yourself and Others

Given the rise of AI image manipulation, protecting yourself and others means being smart about what you see and share online. It's about developing a healthy skepticism, especially when something seems too good or too shocking to be true. Always think critically about the source of an image and whether it looks authentic. This isn't just about avoiding "undress AI Telegram bot name" situations; it's about being a responsible digital citizen in general. Being able to tell what's real and what's not is a very important skill in today's digital world.

If you come across content that seems suspicious or harmful, don't share it further. Spreading such content, even if you're just trying to show how bad it is, can contribute to the harm. Instead, consider reporting it to the platform where you found it. Taking proactive steps like this can help make the internet a safer place for everyone. Pushing back against the misuse of these powerful technologies is a collective effort. So, be mindful of your own actions, and encourage others to do the same.

Being Aware of Digital Fakes

Being aware of digital fakes means understanding that not everything you see online is real, especially when it comes to images and videos. AI technology has made it incredibly easy to create convincing fakes, and these can be very hard to spot with the naked eye. Look for subtle inconsistencies, like strange lighting, unusual facial features, or awkward body positions. Sometimes the background doesn't quite match the person in the foreground. These little details can be clues that something is amiss. It's like being a detective, looking for tiny discrepancies.

There are also tools and techniques that can help you verify the authenticity of an image, though they are not always foolproof. Reverse image searches can sometimes show whether an image has appeared elsewhere online, perhaps in its original form. Staying informed about the latest AI capabilities also helps. The more you understand how these fakes are made, the better equipped you are to recognize them. So, keep learning and stay curious, because the technology is always changing, and so are the methods of manipulation.

Reporting Misuse

If you encounter content that you suspect is a non-consensual deepfake or other harmful AI-generated imagery, reporting it is a very important step. Most social media platforms and messaging apps have mechanisms for reporting content that violates their terms of service. Look for options like "report," "flag," or "abuse." Providing as much detail as possible in your report can help the platform investigate and take action more quickly. This is a direct way to combat the spread of such harmful material, and it makes a difference when people take the time to report things.

Beyond reporting to the platform, in some cases you might consider reporting to law enforcement, especially if the content involves a minor or is being used for harassment or blackmail. Organizations dedicated to fighting online abuse can also offer support and guidance. For example, you could look for resources from groups that focus on digital rights and safety; they often have specific advice on how to handle these situations. It's important to remember that you're not alone, and there are ways to get help and to take action against this kind of misuse. You can learn more about the impact of AI misuse from reputable sources.

Promoting Digital Literacy

Promoting digital literacy means helping people understand how the internet and digital technologies work, and how to use them safely and responsibly. This includes teaching about the risks of AI manipulation, the importance of privacy, and the concept of digital
