Beyond The Garden: Unpacking The Real 'Adam' Worth In Today's World

Have you ever stopped to ponder the "net worth" of figures like Adam and Eve? It's a curious thought, isn't it? Perhaps you're picturing ancient treasures or vast orchards. While the idea of biblical figures having a modern-day financial portfolio is, well, a bit whimsical, the question itself hints at something deeper: what truly holds value, and how do we measure it?

For many, the names Adam and Eve conjure images of humanity's earliest beginnings, a foundational story that shapes cultures and beliefs around the globe. Their "worth" in this context isn't about money; it's about their profound impact on our understanding of existence, morality, and the human condition. They represent, in a way, the origin story for so many discussions about life itself, and their legacy, arguably, is priceless.

Yet there's another "Adam" that holds immense, tangible worth in our current world, particularly in the fast-moving landscape of technology and artificial intelligence. This "Adam" isn't a person from ancient texts, but rather a powerful tool: an algorithm that is reshaping how machines learn and understand our complex world. So, let's explore this modern "Adam" and see why its value is so hard to measure.

Table of Contents

- The Original Adam and Eve: A Look at Their Enduring Legacy

- The Modern Adam: Unveiling the Adam Optimization Algorithm

- How Adam Works: A Peek Under the Hood

- Adam's Impact and Its Evolution

- Why Adam Matters in Today's Tech Landscape

- Frequently Asked Questions About Adam and Eve Net Worth

The Original Adam and Eve: A Look at Their Enduring Legacy

When we think about Adam and Eve from ancient stories, their "worth" isn't something you'd find on a balance sheet. Their story is about beginnings, about choices, and about the very first moments of human experience as many understand it. They are figures of immense symbolic value, deeply embedded in the cultural fabric of countless societies across the globe.

These foundational figures are central to discussions about the origin of sin and death, as mentioned in various ancient texts. People often debate who was the first to err, Adam or Eve, and in antiquity the argument sometimes took a different shape altogether: some considered whether it was Adam or Cain who committed the first significant misstep, which is a rather interesting historical point of view.

The Wisdom of Solomon, for instance, is one text that expresses views related to these ancient narratives. Their enduring presence in stories and teachings gives Adam and Eve a cultural "net worth" that transcends any material possessions. It's a worth tied to narrative, to morality, and to the very questions we ask about where we come from and why we are here.

The Modern Adam: Unveiling the Adam Optimization Algorithm

Now, shifting gears quite a bit, there's another "Adam" that has accumulated a different kind of "net worth" in our contemporary world. This is the Adam optimization algorithm, a significant advancement in the field of machine learning. It's a method that helps train complex artificial intelligence models, especially those used in deep learning, and it was proposed by D. P. Kingma and J. Ba back in 2014.

This Adam algorithm, in essence, combines the best features of two other well-known optimization methods: Momentum (often called SGDM) and RMSprop. It's like taking the most effective parts of two powerful tools and putting them together to create something even better. This combination has been a game-changer for many researchers and developers working with AI.

Before Adam came along, training neural networks often ran into tricky issues. Needing to pick just the right learning rate, or getting stuck in regions where progress was very slow, were common problems. Adam offered a smart way around these difficulties, making the whole process of teaching machines much smoother and more efficient.

How Adam Works: A Peek Under the Hood

So, how does this modern Adam actually work its magic? It's quite different from older methods like traditional stochastic gradient descent (SGD). In its plain form, SGD applies a single learning rate, often called alpha, to every adjustment it makes during training. Unless a schedule is added on top, that rate stays the same from start to finish, which can be a bit rigid.
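To make the "one learning rate for everything" point concrete, here is a minimal sketch of a plain SGD update in Python. The function name `sgd_step` and the toy objective are assumptions chosen for illustration; they do not come from any particular library.

```python
def sgd_step(params, grads, alpha=0.05):
    """One plain SGD update: every parameter uses the same fixed step size alpha."""
    return [p - alpha * g for p, g in zip(params, grads)]


# Hypothetical toy objective: f(w) = w0**2 + 10 * w1**2
# Its gradient is (2 * w0, 20 * w1), so the two coordinates have very different scales.
w = [1.0, 1.0]
for _ in range(3):
    grads = [2 * w[0], 20 * w[1]]
    w = sgd_step(w, grads, alpha=0.05)

print(w)
```

Notice that the same alpha has to serve both coordinates, even though one gradient is ten times larger than the other. That tension is what adaptive methods try to relieve.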

Adam, however, is much more adaptable. It calculates what are called the "first-order moment estimate" and the "second-order moment estimate" of the gradients. In practice, this means Adam keeps a running average of the gradients themselves (which captures their typical direction) and a running average of their squared values (which captures their typical size). These estimates then let it set an independent, adaptive learning rate for each and every parameter in the model, which is a really clever approach.

This adaptive learning rate feature is a key reason why Adam has become so popular. It means the algorithm can adjust how quickly it learns for different parts of the model, speeding up progress where it's needed and slowing down where things might get unstable. It's a bit like having a smart tutor who knows exactly how much to push you on each subject, rather than giving everyone the same homework schedule.
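For readers who like to see the math, the two moment estimates and the resulting update are usually written as follows. The symbols are the conventional ones and are not spelled out in this article: g_t is the gradient at step t, beta_1 and beta_2 are decay rates, alpha is the base learning rate, and epsilon is a small constant that avoids division by zero.

```latex
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t
  && \text{(first-moment estimate)} \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^{2}
  && \text{(second-moment estimate)} \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^{t}}, \qquad
\hat{v}_t = \frac{v_t}{1 - \beta_2^{t}}
  && \text{(bias correction)} \\
\theta_t &= \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
  && \text{(parameter update)}
\end{aligned}
```

Every operation here is applied element by element, so each parameter ends up with its own effective step size.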

The algorithm is commonly described as combining the advantages of both Momentum and RMSprop. Momentum speeds up progress along directions where the gradients point consistently, while RMSprop scales each parameter's learning rate by the magnitude of its recent gradients. Adam brings these two ideas together, making it, arguably, one of the most effective optimization methods available today for deep learning models.
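The way the two ideas fit together can be sketched in a few lines of Python. This is a simplified illustration that works on plain lists of floats, not a production implementation. The name `adam_step` is an assumption for this example, and the default values shown (alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8) are the commonly cited defaults.

```python
import math


def adam_step(theta, grad, m, v, t, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update over lists of floats; t is the 1-based step count.

    Returns the updated (theta, m, v).
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g       # Momentum-style average of gradients
        v_i = beta2 * v_i + (1 - beta2) * g * g   # RMSprop-style average of squared gradients
        m_hat = m_i / (1 - beta1 ** t)            # bias correction for the zero start
        v_hat = v_i / (1 - beta2 ** t)
        new_theta.append(p - alpha * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v


# Two parameters whose gradients differ by a factor of 10,000 (made-up values).
theta = [1.0, 1.0]
m = [0.0, 0.0]
v = [0.0, 0.0]
theta, m, v = adam_step(theta, [0.01, 100.0], m, v, t=1)
print(theta)
```

On this first step both parameters move by roughly alpha, despite the huge difference in gradient size. That is the per-parameter adaptation at work: the division by the square root of the second-moment estimate normalizes each step.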

Adam's Impact and Its Evolution

Adam's introduction in 2014 was a significant moment for deep learning. It quickly became a widely used method for training machine learning models, especially complex deep learning models. Its ability to accelerate convergence in challenging optimization problems, even with very large datasets and many parameters, was a huge benefit.


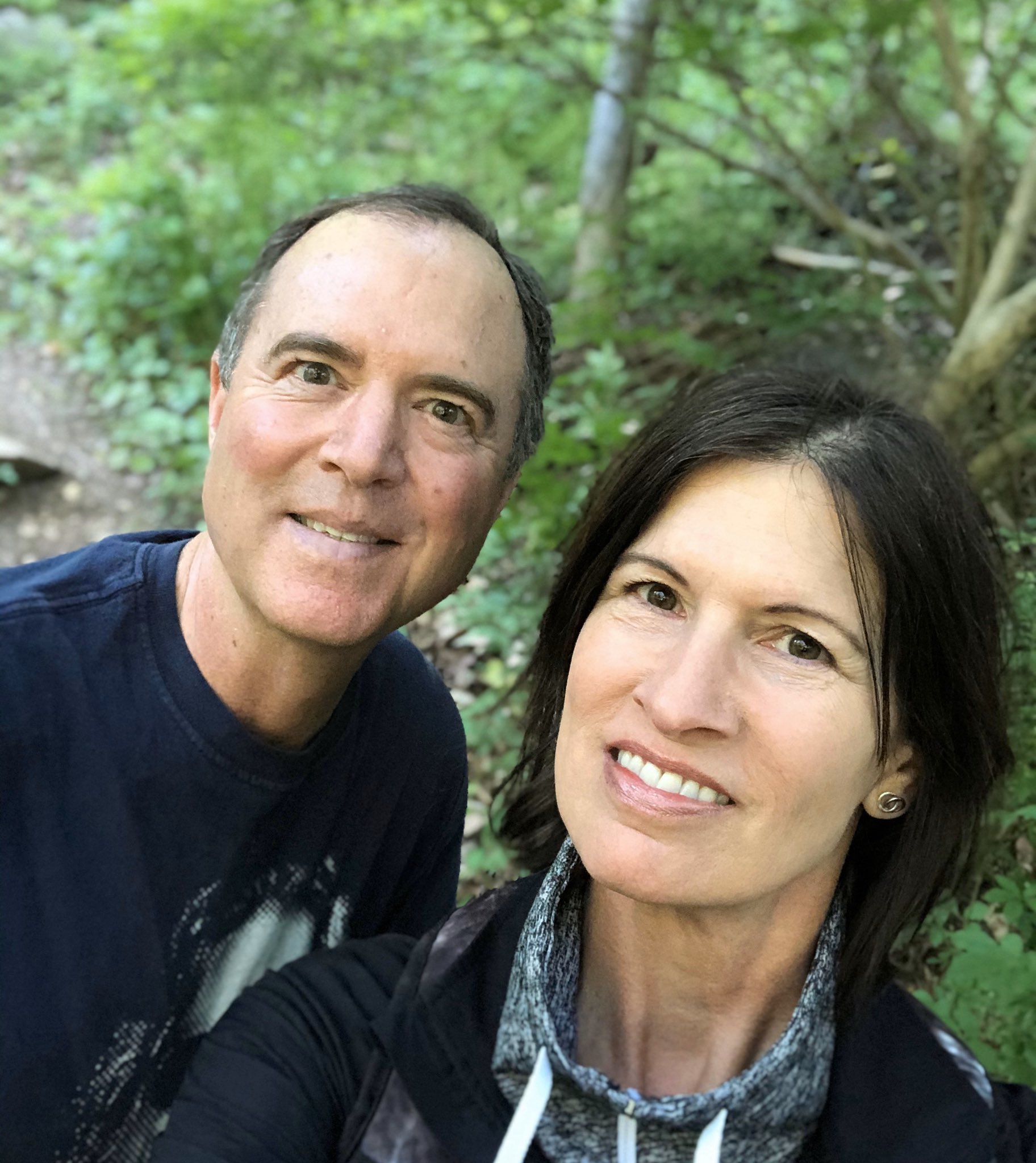
