AI Undressing: Understanding The Ethical Challenges Of Digital Image Manipulation

The digital world changes quickly, and new tools seem to appear almost overnight. Artificial intelligence (AI) now does things that once seemed impossible, from sorting through large volumes of text to creating images that look strikingly real. Those abilities come with serious concerns, especially when AI is used to create or alter pictures in ways that cause harm. One of the most sensitive examples is what is often called "AI undressing," a topic that raises hard questions about privacy, consent, and how we want technology to behave.

The idea of "AI undressing" points to a bigger discussion about the ethical responsibilities of those who build these systems and those who use them. The question is not only how the technology works but what it does to people. When AI changes what a picture shows without the subject's permission, it can cause deep distress and spread false information.

This article sheds some light on the issue. We'll cover what "AI undressing" means, why digital consent matters, and what steps can help ensure AI is used for good rather than harm. We'll also look at how society and the people who create these tools can work together to build a safer digital space for everyone.

Table of Contents

- What is AI Undressing, Anyway?

- The Real-World Impact: Why Digital Consent Matters

- The Broader Picture: AI Ethics and Responsibility

- Addressing the Challenge: What Can Be Done?

- Frequently Asked Questions About AI Image Manipulation

What is AI Undressing, Anyway?

When people talk about "AI undressing," they generally mean using AI tools to alter an image of a person so that they appear unclothed, even though they were fully clothed in the original picture. It is a specific kind of digital image manipulation and part of the larger category known as deepfakes. These systems learn patterns from large collections of pictures and then apply what they have learned to produce new, convincing images or videos.

These systems rely on generative adversarial networks (GANs) and other generative techniques to produce altered images. They fill in details or change appearances based on patterns learned from vast amounts of data. The same broad family of machine learning methods also powers everyday tasks such as classifying text; here it is applied in a visual, and sometimes harmful, context.

The core issue with "AI undressing" is not the technology itself but how it is used. A tool that can build something valuable can also be used to cause harm. Because AI can create realistic images, pictures can be made to show things that never happened, and that is where the ethical questions arise. Advances in AI are often beneficial, but they bring new responsibilities for everyone involved, from creators to everyday users.

This is not a theoretical problem; it affects real people. Because these images are easy to generate and share, the potential for misuse is significant. That is why calls for "AI developed with wisdom," such as the one made by Ben Vinson III, matter. As AI systems get better at understanding the visual world, they also need to be built with strong ethical boundaries.

The Real-World Impact: Why Digital Consent Matters

Creating "AI undressing" images without the subject's permission has serious real-world consequences. It is not just a digital trick; it violates a person's privacy and dignity, and it can have a lasting effect on their emotional well-being and reputation. This is why digital consent is vital: it means respecting people's boundaries online.

A Breach of Trust and Privacy

At its heart, "AI undressing" without consent is a profound breach of trust and privacy. People share images of themselves online expecting them to be used respectfully. Altering those pictures without consent shatters that trust and leaves people feeling exposed and vulnerable, as if their personal space had been invaded. Having one's image used against one's will is deeply distressing.

This kind of manipulation can cause anxiety, humiliation, and even depression. It also has a chilling effect: people become less willing to share their lives online, which limits self-expression and connection. The harm lies not only in the image itself but in the loss of control over one's own identity and how one is seen by others.

The privacy problem extends beyond the individual. When AI can create such convincing fakes, it becomes harder for anyone to trust what they see online. If people cannot tell what is real, the whole digital environment feels less secure. In that sense it acts like a hidden failure in our systems of digital trust, and it needs to be addressed directly.

The Spread of Misinformation and Harmful Content

Beyond the individual impact, "AI undressing" contributes to the broader problem of misinformation and harmful content online. Manipulated images can be shared widely and quickly, which makes their distribution hard to control. Once an image is out, it is extremely difficult to take back, and the damage can be done almost instantly. This speed is a significant challenge for platforms and individuals alike.

Realistic fakes also make it harder to tell what is real, which breeds confusion and a general distrust of visual media. Because we rely so heavily on images and videos for information, this erosion of trust is dangerous. People may believe things that are not true, or dismiss things that are, with serious consequences for public discourse and personal relationships.

The situation also raises questions about accountability. Who is responsible when these images are created and shared: the person who made the image, the platform that hosts it, or the AI system itself? Society is still grappling with these questions. They point to the need for AI systems that refuse harmful requests by default, which means building in ethical checks from the very beginning.

The Broader Picture: AI Ethics and Responsibility

The challenges posed by "AI undressing" are one piece of a much larger puzzle: the need for strong AI ethics and a sense of responsibility among everyone who interacts with this technology. As AI systems become more powerful and more integrated into daily life, the moral implications of their use matter more. The goal is not only to prevent harm but to make sure AI serves the common good.

As large language models and other AI systems become part of everyday life, new ways of checking their reliability are increasingly needed. That applies to visual AI as much as to text-based AI. These systems need to be reliable not only in their technical output but also in their ethical behavior, which means building safeguards into the core of AI design so that misuse is actively prevented.

Building AI with Wisdom and Safeguards

A key part of addressing these issues is building AI with wisdom, as Ben Vinson III, president of Howard University, has urged. Developers and researchers need to consider the potential for misuse from the very beginning of the design process. It is not enough for AI to work; it needs to work responsibly, with built-in limitations and protections against generating harmful content.

This ties into efforts to train more reliable reinforcement learning models. If AI can be trained to handle complex, variable tasks without introducing hidden failures, it should also be possible to train it to recognize and refuse requests that violate ethical guidelines. That means teaching a system not just what to do but what *not* to do, especially with sensitive personal data and images.

Transparency in how these systems are developed and deployed also matters. Users should have some idea of how an AI system might affect their privacy or their image. That openness builds trust and allows public discussion about the boundaries of AI use, so that the people affected by the technology have a say in how it shapes the world.

User Awareness and Digital Literacy

Developers have a big role to play, but users also have a responsibility to be aware and digitally literate. That means understanding how AI works, recognizing the signs of manipulated content, and being cautious about what we share and believe online. Knowing how to spot a deepfake, for example, can protect you and others from misinformation.

For instance, look for inconsistencies in lighting, strange blurs, or unnatural movements in videos. Sometimes the audio does not quite match the visuals, or a person's blinking patterns seem off. Clues like these can signal that an image or video has been altered. Learning them helps you avoid accidentally amplifying a fake.

Think twice before sharing any image or video that seems questionable, especially if it shows someone in a compromising situation. If you are not sure something is real, do not share it. Every share amplifies the impact, so this simple act helps prevent the spread of harmful content and protects others.

You can learn more about AI ethics on our site, which offers a broader perspective on responsible AI development and use. Staying informed is your best defense in this evolving digital landscape.

Addressing the Challenge: What Can Be Done?

Tackling "AI undressing" and similar forms of digital image manipulation requires a multi-faceted approach. No single solution will fix everything; progress depends on technology creators, policymakers, and everyday users working together. The problem is complex, but there are concrete steps that can make the online world safer for everyone.

Technological Solutions and Countermeasures

One important area of focus is technology that counters the misuse of AI. Just as AI can create manipulated images, it can also be used to detect them. Researchers are working on detection tools that identify the subtle digital fingerprints left by AI generation. These tools continue to improve, and they offer a promising way to flag or remove harmful content before it spreads widely.

Another approach is watermarking or digital signatures. This involves embedding invisible codes in original images or videos, or attaching signatures to them, so that their authenticity can be verified. If an image is later altered, the code is broken or no longer matches, making it clear that the content has been tampered with. This could give individuals more control over their digital likeness and help platforms identify fakes. It works a bit like a digital ID for your pictures: a way to show they are yours and untouched.
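To make the signature idea a little more concrete, here is a minimal sketch in Python. It is a simplification rather than a real provenance system: it uses a shared-secret HMAC over the raw file bytes instead of an invisible watermark or a public-key signature, and the function names, the key, and the stand-in image bytes are all hypothetical.

```python
import hashlib
import hmac


def sign_image(image_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex tag computed over the exact bytes of the image."""
    return hmac.new(secret_key, image_bytes, hashlib.sha256).hexdigest()


def is_untampered(image_bytes: bytes, secret_key: bytes, signature: str) -> bool:
    """Recompute the tag and compare it with the stored one in constant time."""
    expected = sign_image(image_bytes, secret_key)
    return hmac.compare_digest(expected, signature)


# Hypothetical stand-ins for a real image file and a real secret key.
original = b"stand-in bytes for an original image file"
key = b"hypothetical-secret-key"

tag = sign_image(original, key)

print(is_untampered(original, key, tag))            # unchanged bytes: True
print(is_untampered(original + b"\x00", key, tag))  # altered bytes: False
```

Changing even a single byte of the file changes the tag, which is what lets a verifier notice tampering. A check like this only shows that the bytes differ from the signed original; it says nothing about how or why they were changed.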

Some AI developers are also implementing safeguards within their own systems to prevent the generation of non-consensual or harmful content. This can mean training models under ethical guidelines that prohibit creating explicit or manipulated images of real people, so that the system learns what is acceptable from the start. A proactive approach like this aims to stop problems before they begin.

Legal and Policy Frameworks

Technology alone cannot solve everything; strong legal and policy frameworks are also crucial. Governments and international bodies are starting to recognize the need for laws that specifically address the creation and sharing of non-consensual deepfakes, including "AI undressing." These laws aim to give victims legal recourse and to hold those who create and distribute such content accountable.

Countries are exploring various legal approaches, from criminalizing the creation of such images to allowing victims to sue for damages. The challenge is keeping pace with rapid advances in AI: laws need to be flexible enough to cover new forms of manipulation as they emerge. It is a constant race between innovation and regulation, but clear legal consequences can be a powerful deterrent.

Platform policies also play a significant role. Social media companies and other online platforms need clear rules against sharing non-consensual manipulated content, and they need to enforce those rules consistently. That means effective reporting mechanisms and swift removal of harmful images, which makes it clear that such content is not welcome.

Fostering a Culture of Ethical AI Use

Ultimately, a long-term solution involves fostering a culture in which ethical AI use is the norm, not the exception. This means ongoing education for everyone, from young students learning about digital citizenship to professionals developing cutting-edge AI. It means instilling a sense of responsibility and empathy in how we interact with technology and with each other online. This shift in mindset is arguably the most powerful tool we have for positive change.

Open discussions about AI ethics in schools, universities, and workplaces can raise awareness and promote critical thinking. When people understand the potential harms, they are more likely to make responsible choices. This collective effort is essential for a future in which AI serves humanity without causing unintended harm.

Supporting research into responsible AI is also crucial. Institutions such as MIT, for example, have examined the environmental and sustainability implications of generative AI. This research covers not just technical solutions but also societal impact and frameworks for responsible deployment, so that technological progress stays aligned with human values.

Check out our other articles on digital safety for more tips on protecting yourself and others online.

Frequently Asked Questions About AI Image Manipulation

How can I tell if an image has been manipulated by AI?

AI-generated fakes can be tricky to spot, and the technology keeps improving. Still, there are often subtle clues. Look for unusual distortions in the background, lighting that does not make sense, or unnatural textures on skin or clothing. Inconsistent shadows, odd reflections in the eyes, or strange patterns in hair can also give a fake away, as can hands or ears that look slightly off. Often the details simply do not add up.

What should I do if I find a non-consensual AI-manipulated image of myself or someone I know?

If you discover a non-consensual AI-manipulated image, the first step is to report it to the platform where you found it. Most social media sites and image-sharing platforms have reporting mechanisms for harmful content. Collect evidence, such as screenshots and URLs, before the content is removed. Depending on where you live, you may also have legal options, so consider speaking with a legal professional. You are not alone in this, and help is available.
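For readers comfortable with a little scripting, the evidence-collecting step can be sketched as follows. This is only an illustration under assumptions: the function name, the record fields, the URL, and the stand-in screenshot bytes are all hypothetical, and the idea is simply to note where the content was found, when it was captured, and a fingerprint of the saved screenshot.

```python
import hashlib
from datetime import datetime, timezone


def make_evidence_record(url: str, screenshot_bytes: bytes) -> dict:
    """Bundle the URL, a UTC capture time, and a SHA-256 hash of the screenshot."""
    return {
        "url": url,
        "captured_at_utc": datetime.now(timezone.utc).isoformat(),
        "sha256": hashlib.sha256(screenshot_bytes).hexdigest(),
    }


# Hypothetical example values; in practice the bytes would be read from
# the saved screenshot file.
record = make_evidence_record(
    "https://example.com/post/123",
    b"stand-in screenshot bytes",
)
print(record)
```

The hash lets you show later that the saved screenshot has not been changed since it was captured. Keep the record together with the original file.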

Are there laws against AI undressing or deepfakes?

Laws against AI undressing and deepfakes are still developing, but many regions are putting them in place. Some places have laws against non-consensual intimate imagery that can apply to AI-generated content. Others are enacting new legislation specifically targeting deepfakes that create false and harmful depictions of individuals. The rules vary by country and even by state or province, so check the laws in your location. Lawmakers are paying close attention to this area, and it is changing quickly.

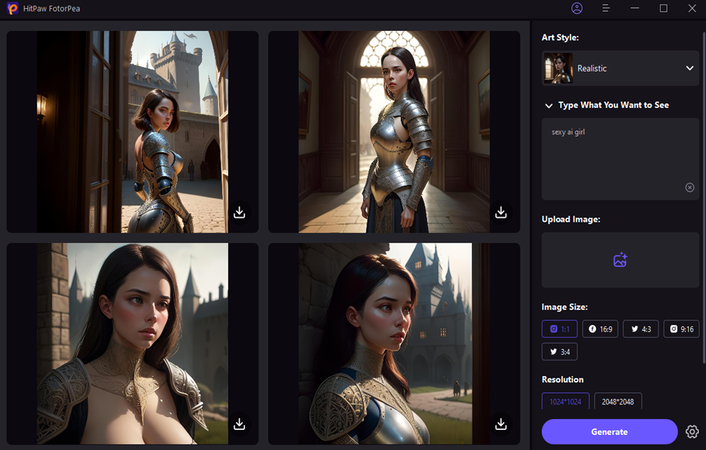

Top 6 Undress AI Tools: A Comprehensive Introduction

opencv_transform/dress_to_correct.py · Rooc/Undress-AI at main

![[OFFICIAL] Top 6 Undress AI Tools: A Comprehensive Introduction](https://images.hitpaw.com/topics/photo-ai/ai-undresser-1.jpg)

[OFFICIAL] Top 6 Undress AI Tools: A Comprehensive Introduction